Turn the knob and the world is quiet (I hope so)

(This article was first published on Guokr.)

The Internet was supposed to make communication easier. As long as they are online, people can express their feelings at any time and talk to others across the country or anywhere in the world.

But on social networks, the reality is increasingly the opposite: you share a few words about how you feel and inadvertently set off a storm. There are always people who leave viciously worded attacks in the comment section, and seeing a single one is enough to ruin your mood for the whole day and kill any desire to discuss things online.

Of course, we can blacklist every annoying keyword, talk only in private circles, or say nothing at all. But does it really have to be this way?

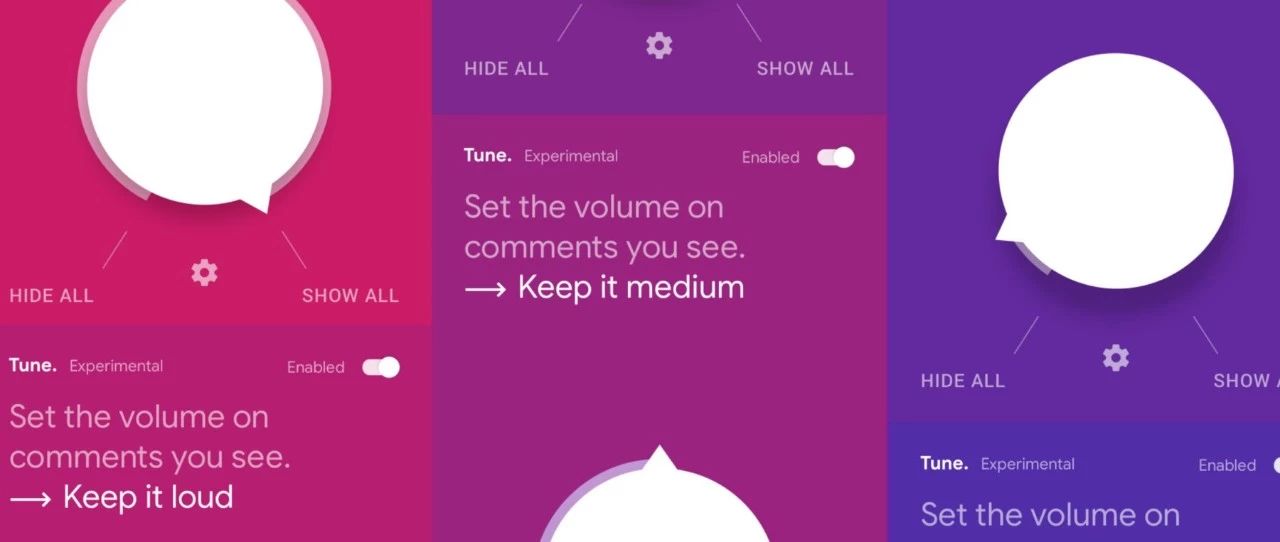

Now a seemingly better option has emerged: Google's Jigsaw has launched a tool called "Tune" that lets users personalize how malicious comments are filtered and helps them turn down discordant voices.

(Photo source: application screenshot)

Turn the knob and the world is quiet

Tune is a Chrome extension whose design, from the interface to the slogan, puts people at ease. In the middle of the simple settings screen is a white knob, and a click of the mouse switches it between the "quiet", "medium" and "loud" positions.

(Photo source: application screenshot)

Like the settings on a volume knob, these positions represent how strictly comments are filtered. As the pointer approaches the "quiet" side, the program applies stricter criteria and hides more potentially malicious comments from the viewer. On the "loud" side, the screening criteria are relatively relaxed. Once you have chosen a "volume" and the sites to apply it to, you can start browsing comments.

(However, these are the only websites currently supported. Photo source: application screenshot)

Tune only hides offensive comments from the pages its own users see in their browsers; the comments themselves are not actually deleted. Objections expressed peacefully are not affected.
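The knob idea can be pictured as a simple threshold filter. The sketch below is only an illustration under stated assumptions: the three cut-off values, the `Comment` class, the `visible_comments` function and the example scores are all hypothetical, and nothing here is taken from Tune's actual code.

```python
from dataclasses import dataclass

# Hypothetical cut-offs: a comment is hidden when its score is above the
# threshold for the selected knob position. These are not Tune's real values.
THRESHOLDS = {"quiet": 0.3, "medium": 0.6, "loud": 0.9}


@dataclass
class Comment:
    text: str
    toxicity: float  # 0.0 (healthy) to 1.0 (very likely toxic)


def visible_comments(comments, knob):
    """Return the comments shown at this knob position.

    The input list is left untouched: comments are hidden, not deleted.
    """
    threshold = THRESHOLDS[knob]
    return [c for c in comments if c.toxicity <= threshold]


# Made-up scores, for illustration only.
comments = [
    Comment("I disagree, and here is why.", 0.05),
    Comment("That argument is pretty weak.", 0.45),
    Comment("How could you be so stupid?", 0.95),
]

for knob in ("quiet", "medium", "loud"):
    shown = visible_comments(comments, knob)
    print(knob, [c.text for c in shown])
```

With these made-up numbers, the polite objection survives at every setting, while the insult is hidden even on "loud".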

How does it work?

So how does Tune decide whether a comment is malicious? It relies on Perspective, an API (application programming interface) the company released earlier, behind which sits an AI that rates the "malice" of comments.

The AI uses a numerical score to judge whether a comment is "healthy" or "toxic". A "toxic" comment is defined as a "rude, disrespectful or unreasonable comment that makes people want to end the discussion immediately."
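For readers curious what asking Perspective for a score looks like, here is a minimal sketch. The endpoint and field names follow the Perspective API's public documentation as I understand it, so check the current documentation before relying on them. The helper names `build_request` and `extract_toxicity` are my own, and the sample response is hand-written with a placeholder score, not real output. Actually sending the request requires an API key and is left out.

```python
import json

# Endpoint as described in the public Perspective API documentation;
# a request must be sent as an HTTP POST with an API key appended (?key=...).
ANALYZE_URL = "https://commentanalyzer.googleapis.com/v1alpha1/comments:analyze"


def build_request(text, language="en"):
    """Build the JSON body asking for a TOXICITY score for one comment."""
    return {
        "comment": {"text": text},
        "languages": [language],
        "requestedAttributes": {"TOXICITY": {}},
    }


def extract_toxicity(response):
    """Pull the overall toxicity score (0 to 1) out of a response body."""
    return response["attributeScores"]["TOXICITY"]["summaryScore"]["value"]


# Hand-written example of the response shape; the value is a placeholder.
sample_response = json.loads("""
{
  "attributeScores": {
    "TOXICITY": {
      "summaryScore": {"value": 0.93, "type": "PROBABILITY"}
    }
  },
  "languages": ["en"]
}
""")

print(json.dumps(build_request("how could you be so stupid"), indent=2))
print(extract_toxicity(sample_response))
```

The score is a probability-like value between 0 and 1, which is what a tool like Tune can then compare against the threshold for the chosen knob position.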

To develop Perspective, the team first collected millions of online comments, recruited human raters to score the "toxicity" of each one, and then used the rated comments as training material for the AI.

Perspective's website (www.perspectiveapi.com) has a comment box where you can try out the AI scoring system. Type in the English sentence you want to test and the page quickly reports how likely it is to be malicious; if you think the result is wrong, you can click to submit a correction.

(An example of scoring. The phrase "how could you be so stupid" is obviously aggressive, and the AI's score for it is close to 1, meaning it is very likely to be "toxic".)

But the question is, does it really work?

Blocking annoying comments as easily as turning a volume knob is, as an idea alone, clever enough to deserve ten likes on the spot. However, Tune in its current form is still far from the ideal of making the Internet a friendlier place.

This is not only because it works only in the Chrome browser, only in English, and only on a few social platforms. The most important questions are: is AI really up to the job? And do we really have to hand the job of moderating comments over to AI?

Since Perspective was released in 2017, its comment recognition technology has drawn a good deal of criticism. People who have tried the comment scoring box complain that the AI is still too "stupid" to understand the complexity of human language.

For example, it sometimes gives strangely high scores to sentences that are not actually hostile, such as "People will die if they kill Obamacare" (although the malice score of this sentence did drop a little after the complaints in 2017).

What's more, it is not difficult for genuinely offensive remarks to slip past the AI: sometimes all it takes is deleting a few spaces or deliberately adding some spelling mistakes.

(With the spaces deleted, the program no longer recognizes "hate you". Photo source: Perspective screenshot)
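Why such small edits can fool a scorer is easy to see with a toy example. The sketch below is deliberately naive and is not how Perspective works: it is a hypothetical word-list scorer with made-up weights, included only to show that any system leaning on recognizable word units can lose track of a phrase once the spaces are removed or a letter is swapped.

```python
import re

# Toy word list with made-up weights; unrelated to Perspective's real model.
TOXIC_WORDS = {"hate": 0.8, "stupid": 0.9}


def toy_score(text):
    """Score a comment as the highest weight of any listed word it contains.

    Words are found by splitting on anything that is not a letter or digit,
    so "Ihateyou" is treated as one unknown word.
    """
    words = re.findall(r"[a-z0-9]+", text.lower())
    return max((TOXIC_WORDS.get(w, 0.0) for w in words), default=0.0)


print(toy_score("I hate you"))   # the listed word is found
print(toy_score("Ihateyou"))     # spaces removed: no listed word is found
print(toy_score("I h4te you"))   # deliberate misspelling: no listed word is found
```

A real classifier is far more sophisticated than this, but the screenshot above suggests it can still be tripped up by the same kind of trick.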

Jigsaw has openly admitted these flaws. Its introduction page states that Tune is only an experimental product that is still being improved, and that it still misses some malicious comments or accidentally hides comments that are perfectly fine. Jigsaw also stresses that the technology is no panacea for malice on the Internet, let alone for targeted threats.

But they think it is at least better than shutting down comments altogether.

Resources:

https://medium.com/Jigsaw/tune-control-the-comments-you-see-b10cc807a171

https://www.perspectiveapi.com/

https://www.wired.com/2017/02/googles-troll-fighting-ai-now-belongs-world/

https://www.engadget.com/2017/09/01/google-perspective-comment-ranking-system/